Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

The “Qwen Team” at Chinese e-commerce giant Alibaba has done it again.

Only days after releasing, for free and under an open source license, what is now the top-performing non-reasoning large language model (LLM) in the world, full stop, even compared to proprietary AI models from well-funded U.S. labs such as Google and OpenAI, in the form of the lengthily named Qwen3-235B-A22B-2507, this group of AI researchers has published another blockbuster model.

That model is Qwen3-Coder-480B-A35B-Instruct, a new open source LLM focused on assisting with software development. It is designed for complex, multi-step coding workflows and can create full-fledged, functional applications in seconds or minutes.

The model is positioned to compete with proprietary offerings such as Claude Sonnet 4 on agentic coding tasks, and it sets new benchmark scores among open models.

It is available on Hugging Face, GitHub, Qwen Chat, via Alibaba's Qwen API, and on a growing list of third-party vibe coding and AI tool platforms.

But unlike Claude and other proprietary models, Qwen3-Coder, as we will call it for short, is available now under an open source Apache 2.0 license. This means any company can download, modify, deploy and use it in commercial applications for employees or end customers free of charge, without paying Alibaba or anyone else.

It also performs so well on third-party benchmarks and in anecdotal use among AI power users for “vibe coding” (coding with natural language and without formal development processes and steps) that at least one of them, LLM researcher Sebastian Raschka, wrote on X: “This might be the best coding model yet. General-purpose is cool, but if you want the best at coding, specialization wins. No free lunch.”

Developers and companies interested in downloading it can find it on Hugging Face.

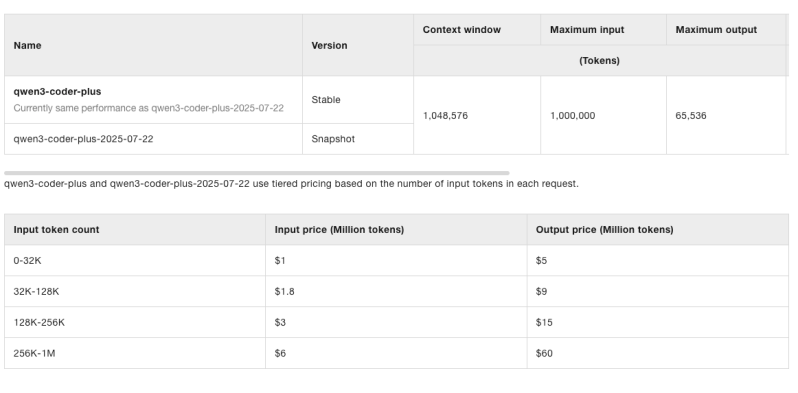

Companies that do not want to host the model on their own or through third-party cloud inference providers can also use it directly through the Alibaba Cloud Qwen API, where pricing starts at $1/$5 per million tokens (MTok) for input/output at up to 32,000 tokens, then rises to $1.8/$9 for up to 128,000 tokens, $3/$15 for up to 256,000 tokens, and $6/$60 above that.
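
To make the tiered pricing concrete, here is a rough Python sketch that estimates the cost of a single request from the figures quoted above. It assumes the tier is chosen by the number of input tokens and that the last tier has no stated upper bound; neither detail is confirmed by the text, and the function and table names are purely illustrative.

```python
# Price tiers as quoted in the article:
# (max input tokens, USD per million input tokens, USD per million output tokens).
# The upper bound of the last tier is not stated, so None means "anything larger" (assumption).
PRICE_TIERS = [
    (32_000, 1.0, 5.0),
    (128_000, 1.8, 9.0),
    (256_000, 3.0, 15.0),
    (None, 6.0, 60.0),
]


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate one request's cost, selecting the tier by input size (assumption)."""
    for max_input, in_rate, out_rate in PRICE_TIERS:
        if max_input is None or input_tokens <= max_input:
            return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000
    raise ValueError("unreachable: the last tier has no upper bound")


if __name__ == "__main__":
    # A 20,000-token prompt with a 2,000-token reply falls in the first tier.
    print(f"${estimate_cost_usd(20_000, 2_000):.4f}")
```

Actual billing rules may differ, so treat the result as an estimate and check the provider's current price list.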

According to documentation published online by the Qwen team, Qwen3-Coder is a Mixture-of-Experts (MoE) model with 480 billion total parameters, 35 billion active per query, and 8 active experts out of 160.
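
For readers unfamiliar with how "8 active experts out of 160" works in practice, the following toy Python sketch shows generic top-k expert routing. It is not Qwen's implementation: the random router scores stand in for a learned router, and normalizing weights with a softmax over only the selected experts is one common choice that is assumed here.

```python
import math
import random

NUM_EXPERTS = 160  # total experts, from the article
TOP_K = 8          # active experts per token, from the article


def route_token(router_logits, k=TOP_K):
    """Return [(expert_index, weight)] for the k highest-scoring experts.

    Weights are a softmax over the selected logits only (an assumed convention).
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


# Random scores stand in for the output of a learned router (illustration only).
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
chosen = route_token(logits)
```

Only the selected experts run for a given token, which is how a model with 480 billion total parameters can use roughly 35 billion per query.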

It natively supports a 256K-token context, with extrapolation up to 1 million tokens using YaRN (Yet another RoPE extensioN), a technique that extends a language model's context length by modifying the rotary position embeddings (RoPE) used during attention computation.
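
As a rough illustration of what modifying RoPE means, the Python sketch below follows the frequency-blending rule described in the YaRN paper: dimensions that complete many rotations within the original context keep their frequency, slow-rotating dimensions are divided by the scale factor, and those in between are blended. The head dimension, RoPE base, and the `alpha`/`beta` thresholds are illustrative assumptions, not Qwen3-Coder's published configuration.

```python
import math


def yarn_inv_freq(head_dim, base, orig_ctx, scale, alpha=1.0, beta=32.0):
    """Blend original and interpolated RoPE frequencies per dimension pair.

    alpha and beta are the ramp thresholds suggested in the YaRN paper for
    LLaMA-family models; they are assumptions here.
    """
    freqs = []
    for i in range(0, head_dim, 2):
        theta = base ** (-i / head_dim)        # standard RoPE frequency
        wavelength = 2 * math.pi / theta
        rotations = orig_ctx / wavelength      # full turns within the original context
        gamma = min(1.0, max(0.0, (rotations - alpha) / (beta - alpha)))
        # gamma = 1 keeps the frequency unchanged; gamma = 0 divides it by the scale.
        freqs.append((1 - gamma) * theta / scale + gamma * theta)
    return freqs


def yarn_attention_factor(scale):
    """Scaling factor sqrt(1/t) = 0.1 * ln(s) + 1 recommended in the YaRN paper."""
    return 0.1 * math.log(scale) + 1.0


# Illustrative values only: 128-dim heads, base 10000, 262,144 -> about 1M tokens (scale 4).
freqs = yarn_inv_freq(head_dim=128, base=10000.0, orig_ctx=262_144, scale=4.0)
```

The point of the blend is that fast-rotating dimensions, which encode local token order, are left alone, while slow-rotating ones are stretched to cover the longer window.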

It was developed as a causal language model and has 62 layers, 96 attention heads for queries and 8 for key-value pairs. It is optimized for token efficiency.

Qwen3-Coder has achieved leading performance among open models on several agentic evaluation suites:

The model also scores competitively on tasks such as agentic browser use, multilingual programming and tool use. Visual benchmarks show progressive improvement across training iterations in categories such as code generation, SQL programming, code editing and instruction following.

In addition to the model, Qwen has open sourced Qwen Code, a CLI tool forked from Gemini Code. This interface supports function calling and structured prompting, making it easier to integrate Qwen3-Coder into coding workflows. Qwen Code supports Node.js environments and can be installed via npm or from source.

Qwen3-Coder also integrates with developer platforms such as:

Developers can run Qwen3-Coder locally or connect to it via OpenAI-compatible APIs using endpoints hosted on Alibaba Cloud.
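
As a sketch of what "OpenAI-compatible" implies, the Python snippet below builds a chat completion request in the widely used `/chat/completions` format using only the standard library. The base URL, API key and model identifier are hypothetical placeholders, not values taken from Alibaba's documentation, and the request is constructed but not sent.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the values from your provider's documentation.
BASE_URL = "https://example.invalid/v1"
API_KEY = "YOUR_API_KEY"
MODEL = "qwen3-coder"  # assumed model identifier


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request in the OpenAI-style format."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


request = build_chat_request("Write a function that reverses a linked list.")
# Once real values are filled in, urllib.request.urlopen(request) would send it.
```

Because the request shape is shared, the same code should work against a locally hosted server or a cloud endpoint by changing only the base URL and model name.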

In addition to pretraining on a corpus that is 70% code, Qwen3-Coder benefits from advanced post-training techniques:

This phase simulates real-world software engineering challenges. To enable it, Qwen built a 20,000-environment system on Alibaba Cloud, providing the scale needed to evaluate and train models on complex workflows such as those in SWE-bench.

For companies, Qwen3-Coder offers an open, top-tier alternative to closed proprietary models. With strong results in code execution and long-context reasoning, it is particularly relevant for:

Its support for long contexts and modular deployment options across various development environments makes Qwen3-Coder a candidate for production-grade AI pipelines at both large tech companies and smaller engineering teams.

To get the best results from Qwen3-Coder, Qwen recommends:

API and SDK examples are provided using OpenAI-compatible Python clients.

Developers can define custom tools and let Qwen3-Coder invoke them dynamically during conversation or code generation.
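
A minimal Python sketch of that pattern follows, assuming the OpenAI-style function calling format: a tool is described with a JSON schema, and when the model returns a tool call, the application runs the matching function and sends the result back as a `tool` message. The `count_lines` tool, the registry and the example tool call are hypothetical and hand-written for illustration; no model is contacted.

```python
import json


def count_lines(text: str) -> int:
    """Hypothetical custom tool: count the lines in a piece of text."""
    return len(text.splitlines())


# Tool description in the OpenAI-style function calling schema.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "count_lines",
        "description": "Count the lines in a piece of text.",
        "parameters": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    },
}]

# Maps tool names to the Python functions that implement them.
REGISTRY = {"count_lines": count_lines}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one tool call and wrap the result as a 'tool' role message."""
    fn = tool_call["function"]
    args = json.loads(fn["arguments"])  # arguments arrive as a JSON string
    result = REGISTRY[fn["name"]](**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


# Hand-written stand-in for a tool call that a model might return.
example_call = {
    "id": "call_0",
    "type": "function",
    "function": {"name": "count_lines",
                 "arguments": json.dumps({"text": "a\nb\nc"})},
}
reply = run_tool_call(example_call)
```

In a real loop, `TOOLS` would be passed with the chat request, and `reply` would be appended to the message history before asking the model to continue.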

Initial responses to Qwen3-Coder-480B-A35B-Instruct have been notably positive among AI researchers, engineers and developers who tested the model in real-world coding workflows.

In addition to Raschka's lofty praise above, Wolfram Ravenwolf, an AI engineer and evaluator at EllamindAI, shared his experience integrating the model with Claude Code on X, stating, “This is certainly the best at the moment.”

After testing several integration proxies, Ravenwolf said he ultimately built his own using LiteLLM to ensure optimal performance, demonstrating the model's appeal to hands-on practitioners focused on toolchain customization.

Educator and AI tinkerer Kevin Nelson also weighed in on X after using the model for simulation tasks.

“Qwen 3 Coder is on a different level,” he posted, noting that the model not only executed on the provided scaffolding but even embedded a message in the simulation's output, an unexpected but welcome sign of the model's awareness of the task context.

Even Jack Dorsey, co-founder of Twitter and Square (now called “Block”), posted a message on X praising the model, writing: “Goose + Qwen3 coder = wow,” a reference to Block's open source AI agent framework Goose, which VentureBeat covered in January 2025.

These responses suggest that Qwen3-Coder is resonating with a technically savvy user base seeking performance, adaptability and deeper integration with existing development stacks.

While this release focuses on the most powerful variant, Qwen3-Coder-480B-A35B-Instruct, the Qwen team indicates that additional model sizes are in development.

These are intended to offer similar capabilities at lower deployment cost, broadening accessibility.

Future work also includes research into self-improvement, as the team explores whether agentic models can refine their own performance through real-world use.